Introducing HoloPart: Generating Complete, Editable Parts for Any 3D Shape

We're open-sourcing HoloPart, a new generative model that understands 3D shapes component-by-component, unlocking powerful editing, animation, and creation workflows.

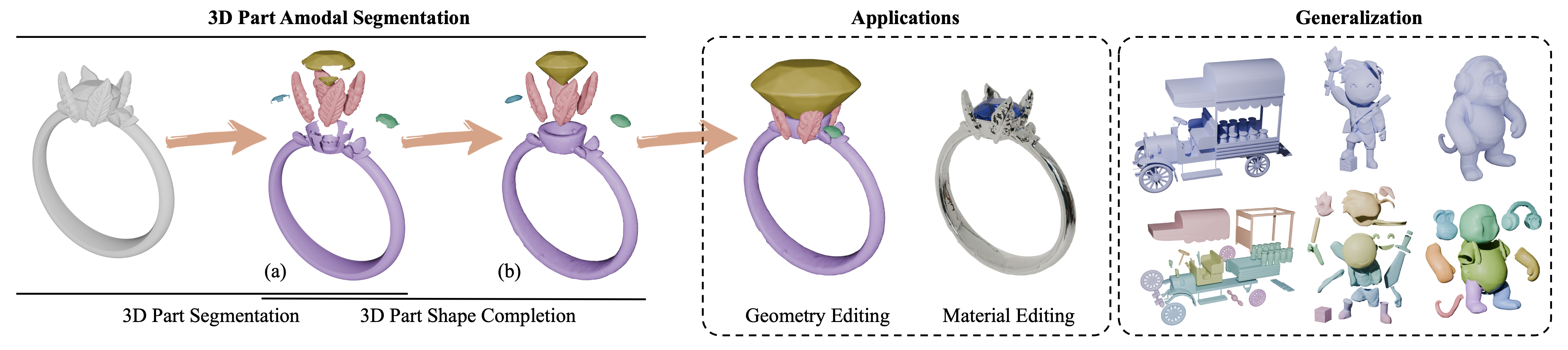

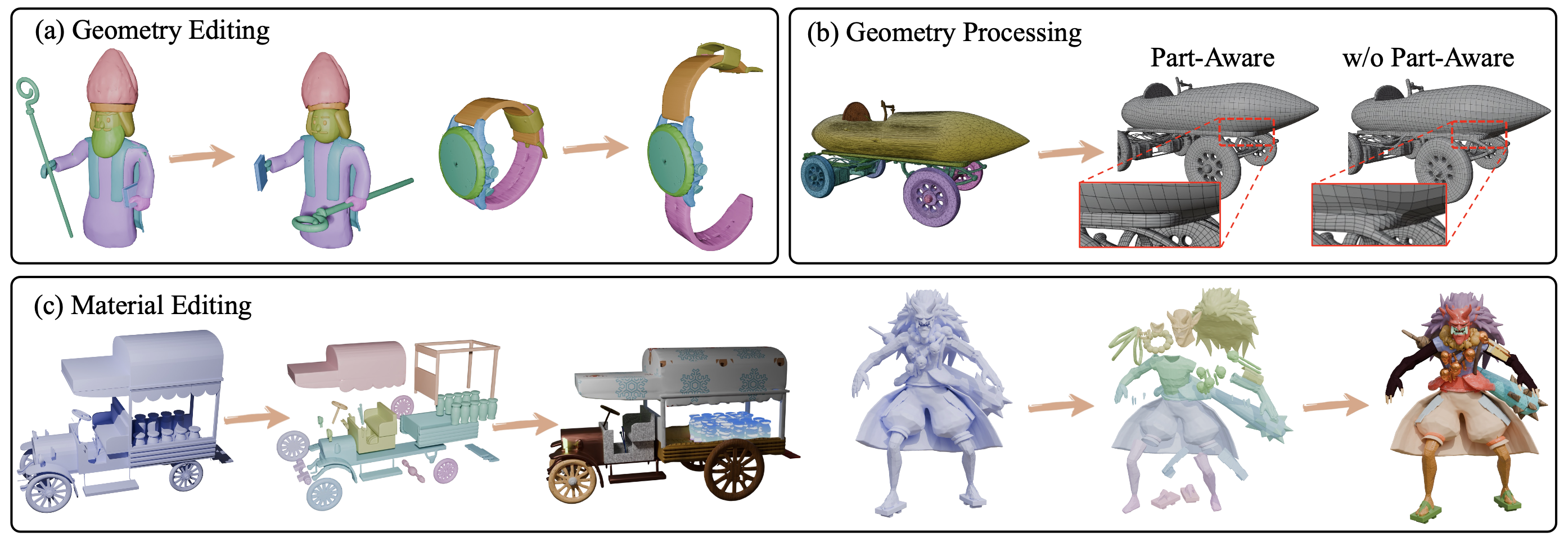

Ever tried editing a 3D model downloaded online, captured from scans, or generated by AI? Often, they're single 'lumps' of geometry, making it incredibly difficult to tweak, animate, or re-texture individual components like a chair's leg or a character's glasses. Existing 3D part segmentation techniques can identify visible surface patches belonging to different parts, but they leave you with broken, incomplete pieces (Figure 1a). This fundamentally limits their usefulness for real-world content creation.

Today, we're thrilled to introduce HoloPart, a new approach and open-source project tackling this challenge head-on. HoloPart introduces the task of 3D Part Amodal Segmentation: decomposing a 3D shape not just into visible patches, but into its underlying complete, semantically meaningful parts, even inferring the geometry hidden by occlusion (Figure 1b).

At the heart of HoloPart is a novel diffusion-based generative model we've developed. We're releasing the code, pre-trained HoloPart models, and an interactive demo today, and we invite the community to build upon this work.

Developers can try it out on Hugging Face.

The Problem: Broken Parts Hold Back 3D Creation

Photogrammetry scans, generative models, and even many human-made assets often lack internal part structure. While methods like SAMPart3D can smartly segment the surface of a 3D model, they can't see "through" the object. If you segment a ring using these methods, you get the visible outer surface of the gem and the band, but not the complete gem shape or the full ring band where they intersect or are occluded.

This limitation is a major bottleneck for:

- Geometry Editing: You can't easily resize just the wheels on a car model if they're fused with the body or incomplete.

- Animation: Rigging and animating parts requires them to be whole objects.

- Material Assignment: Applying distinct materials often needs clean, complete part boundaries.

- Procedural Generation & Asset Remixing: Building variations or combining parts requires well-defined, complete components.

Our Solution: Seeing the Whole Part with HoloPart

Inspired by the concept of amodal perception (our ability to perceive whole objects even when partially hidden), the HoloPart project introduces 3D Part Amodal Segmentation. We achieve this through a practical two-stage approach:

- Initial Segmentation: We first leverage an existing state-of-the-art method (like SAMPart3D) to get the initial surface patches (the incomplete parts).

- Part Completion with HoloPart: This is where the magic happens. We feed the incomplete part segment along with the context of the entire shape into our novel HoloPart model. HoloPart, built on a powerful diffusion transformer architecture, then generates the complete, plausible 3D geometry for that part.
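To make the data flow of the two stages concrete, here is a minimal sketch in plain Python. It is not the HoloPart API: `PartPatch`, `segment_surface`, `amodal_segment`, and `identity_completer` are hypothetical names invented for illustration, the per-point labels stand in for the output of a segmenter such as SAMPart3D, and the completer is a placeholder where the generative model would go.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Point = Tuple[float, float, float]


@dataclass
class PartPatch:
    """Visible surface points of one part (the incomplete stage 1 output)."""
    label: int
    points: List[Point]


def segment_surface(points: List[Point], labels: List[int]) -> List[PartPatch]:
    """Stage 1 stand-in: group surface points by a per-point part label.

    Assumption: the labels come from an external surface segmenter.
    """
    groups: Dict[int, List[Point]] = {}
    for point, label in zip(points, labels):
        groups.setdefault(label, []).append(point)
    return [PartPatch(label, pts) for label, pts in sorted(groups.items())]


# A completer takes (patch points, whole-shape points) and returns the
# points of the completed part. The real model is generative; this type
# only fixes the interface for the sketch.
Completer = Callable[[List[Point], List[Point]], List[Point]]


def amodal_segment(
    points: List[Point], labels: List[int], complete_part: Completer
) -> Dict[int, List[Point]]:
    """Stage 2: complete every patch, conditioning on the whole shape."""
    patches = segment_surface(points, labels)
    return {p.label: complete_part(p.points, points) for p in patches}


def identity_completer(patch: List[Point], shape: List[Point]) -> List[Point]:
    """Placeholder for the model: returns the visible patch unchanged."""
    return list(patch)
```

Calling `amodal_segment(shape_points, labels, identity_completer)` returns one point list per part label. The point of the sketch is the interface: each part is completed separately, but every call also receives the whole shape as context.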

How HoloPart Works:

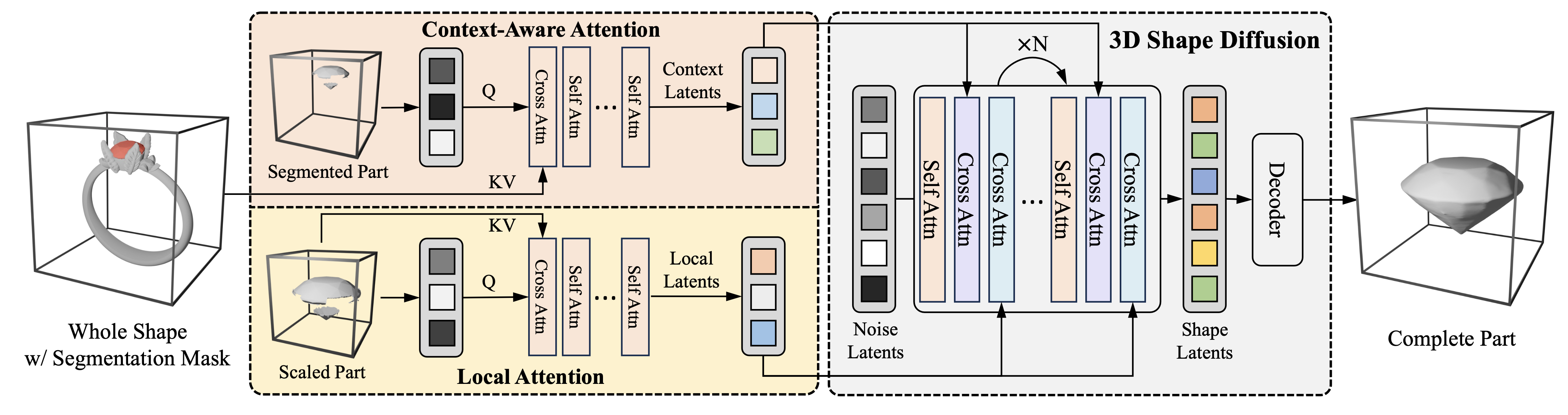

HoloPart isn't just "filling holes." Built upon the strong generative prior of our TripoSG foundation model, it leverages a deep understanding of 3D geometry learned through extensive pre-training on large datasets (like Objaverse) and specialized fine-tuning on part-whole data. HoloPart adapts the powerful diffusion transformer architecture from TripoSG for the specific task of part completion. Its key innovation lies in a dual attention mechanism:

- Local Attention: Focuses intensely on the fine-grained geometric details of the input surface patch to ensure the completed part seamlessly integrates with the visible geometry.

- Context-Aware Attention: Looks at the entire shape and where the part sits within it. This crucial step ensures the completed part makes sense globally – maintaining proportions, semantic meaning, and overall shape consistency.

This allows HoloPart to intelligently reconstruct hidden geometry, even for complex parts or significant occlusion, while respecting the overall structure of the object.
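The idea of attending to two sources can be illustrated with a toy example. The sketch below is not HoloPart's implementation: it uses single-head scaled dot-product cross-attention with identity projections (keys equal values), and it assumes the two attention outputs are simply added to the part tokens as a residual. The function names and the way the branches are combined are assumptions made for illustration.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries: List[Vector], keys_values: List[Vector]) -> List[Vector]:
    """Single-head scaled dot-product cross-attention.

    Simplification: no learned projections, and keys double as values.
    """
    dim = len(queries[0])
    outputs = []
    for q in queries:
        scores = [
            sum(a * b for a, b in zip(q, k)) / math.sqrt(dim) for k in keys_values
        ]
        weights = softmax(scores)
        outputs.append(
            [sum(w * kv[j] for w, kv in zip(weights, keys_values)) for j in range(dim)]
        )
    return outputs


def dual_attention_block(
    part_tokens: List[Vector],
    local_tokens: List[Vector],
    context_tokens: List[Vector],
) -> List[Vector]:
    """Update part tokens from two sources.

    local_tokens: features of the visible surface patch (fine detail).
    context_tokens: features of the whole shape (global consistency).
    Assumption: both branches are added to the input as a residual.
    """
    local = cross_attention(part_tokens, local_tokens)
    context = cross_attention(part_tokens, context_tokens)
    return [
        [p + l + c for p, l, c in zip(pt, lo, co)]
        for pt, lo, co in zip(part_tokens, local, context)
    ]
```

In a real diffusion transformer these tokens would be learned latent features and the block would sit inside each denoising step. Here the vectors are only stand-ins to show that the same part tokens query both the patch and the full shape.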

Results: Complete Parts, Ready for Action

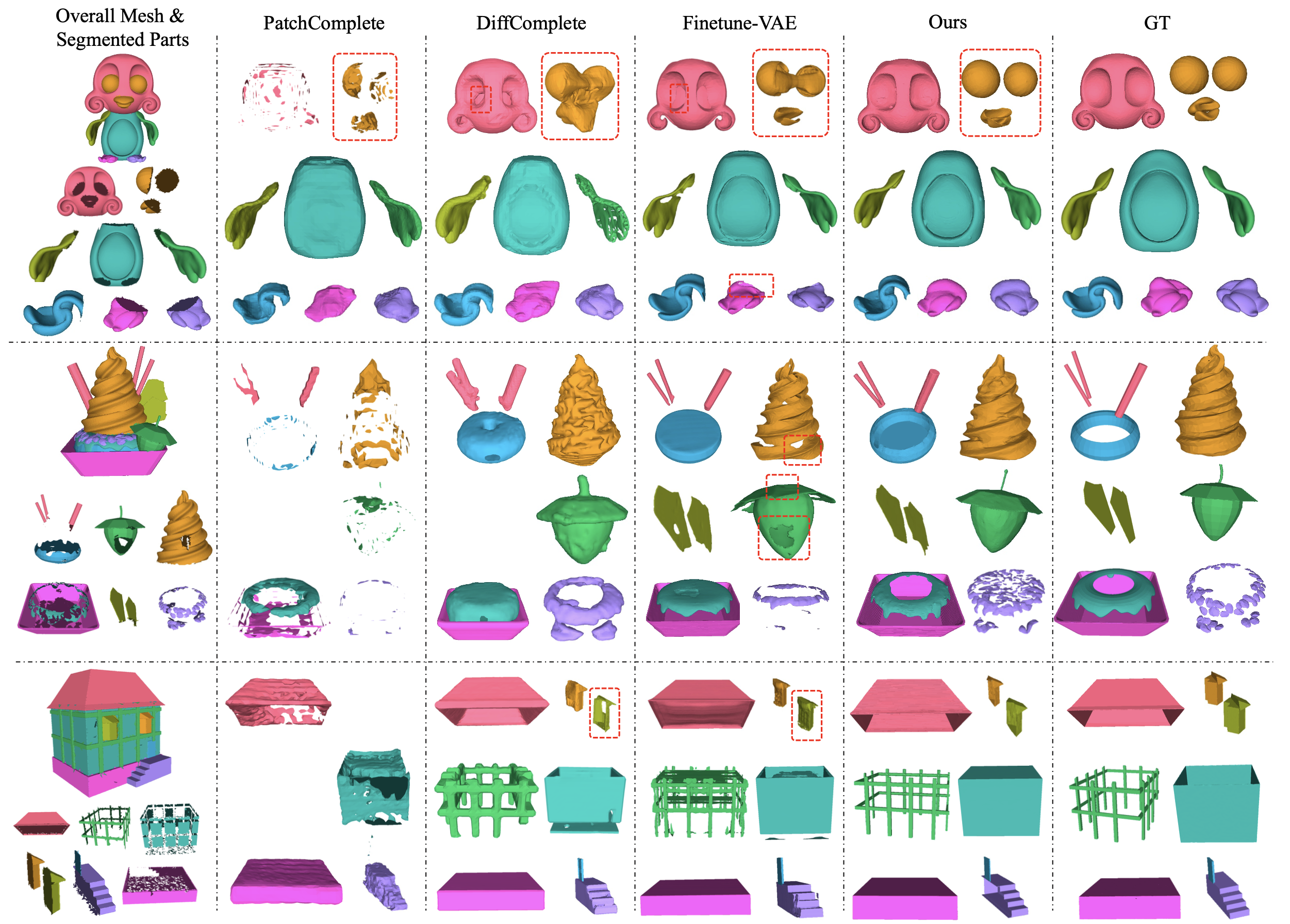

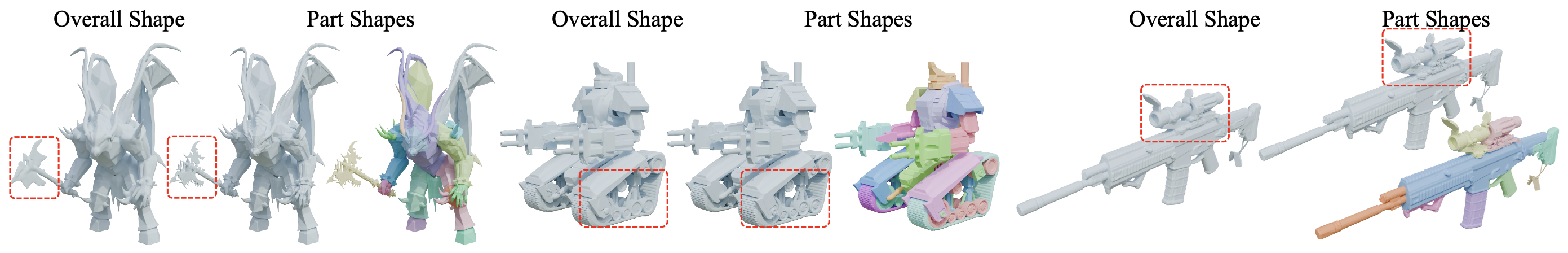

We've established new benchmarks using the ABO and PartObjaverse-Tiny datasets to evaluate this novel task defined within the HoloPart project. Our experiments show that HoloPart significantly outperforms existing state-of-the-art shape completion methods when applied to this challenging part completion task.
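This post does not list the metrics used in the benchmarks. As an illustration of how a completed part can be compared against a ground-truth part, the sketch below computes the Chamfer distance, a common metric in shape completion, between two point sets. It is a brute-force version for small inputs, and the choice of unsquared distances averaged per direction is one convention among several.

```python
import math
from typing import List, Sequence

Point = Sequence[float]


def chamfer_distance(a: List[Point], b: List[Point]) -> float:
    """Symmetric Chamfer distance between two non-empty point sets.

    For each point, find the nearest point in the other set, average
    those distances per direction, and sum the two directions.
    Brute force: O(len(a) * len(b)).
    """
    def one_way(src: List[Point], dst: List[Point]) -> float:
        return sum(min(math.dist(p, q) for q in dst) for p in src) / len(src)

    return one_way(a, b) + one_way(b, a)
```

A lower value means the predicted part lies closer to the reference geometry. Identical point sets give a distance of zero.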

Qualitatively, the difference is clear: where other methods often fail on complex structures or produce incoherent results, HoloPart consistently generates complete, high-fidelity parts that align beautifully with the original shape.

Unlocking Downstream Applications

By generating complete parts, HoloPart unlocks a range of powerful applications that were previously difficult or impossible to achieve automatically:

- Intuitive Editing: Easily grab, resize, move, or replace complete parts (like the ring example in Fig 1, or the car edits in Fig 4a).

- Effortless Material Assignment: Apply textures or materials cleanly to whole components (Fig 1, Fig 4c).

- Animation-Ready Assets: Generate parts suitable for rigging and animation.

- Smarter Geometry Processing: Enable more robust remeshing and other geometry operations by working on coherent parts (Fig 4b).

- Part-Aware Generation: This work provides a foundation for future generative models that can create or manipulate 3D shapes at the part level.

- Geometric Super-Resolution: HoloPart even shows potential for enhancing part detail by representing parts with high token counts (Fig 5).
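To show why complete parts make editing simple, here is a small sketch that resizes a single part about its own centroid. It assumes each part is available as its own list of vertices. `scale_part` is a hypothetical helper written for this example, not part of the released code.

```python
from typing import List, Tuple

Vertex = Tuple[float, float, float]


def scale_part(vertices: List[Vertex], factor: float) -> List[Vertex]:
    """Scale one part uniformly about its centroid.

    Because the part is a complete, separate piece of geometry, the rest
    of the shape is untouched.
    """
    n = len(vertices)
    cx = sum(v[0] for v in vertices) / n
    cy = sum(v[1] for v in vertices) / n
    cz = sum(v[2] for v in vertices) / n
    return [
        (cx + factor * (x - cx), cy + factor * (y - cy), cz + factor * (z - cz))
        for x, y, z in vertices
    ]
```

With a surface-only segment, the same operation would stretch an open shell and expose holes where the part met its neighbors. With a complete part, the result remains a closed, usable piece of geometry.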

Get Started with HoloPart

We believe 3D Part Amodal Segmentation, as explored in the HoloPart project, is a crucial step towards more intuitive and powerful 3D content creation. We're releasing HoloPart under an open-source license to empower researchers and developers.

We're excited to see what the community builds with these tools. Dive in, experiment, and let us know what you think!