The Role-Play Chat Debate: A New Frontier for Emotional Needs

Technology continues to reshape the way we connect and communicate. One of the latest innovations is role-play chat, a type of service that simulates human interaction through characters and scenarios. Some now promote these digital interactions as a way to fulfill emotional needs, which has sparked a debate about their impact and ethical implications. Are role-play chats a legitimate source of emotional support, or do they merely offer a superficial escape from real-world connections?

Meeting Emotional Needs: A Modern Solution

Proponents of role-play chat argue that it offers a unique and accessible way for individuals to satisfy emotional needs. In a world where loneliness and social isolation are on the rise, these platforms can provide comfort, companionship, and a sense of belonging. Users can engage with characters that are designed to be empathetic, understanding, and supportive, providing an outlet for those who might struggle to find such connections in their daily lives.

For many, role-play chat offers a safe space to explore emotions, practice social interactions, and receive validation without the fear of judgment. The anonymity and flexibility of these platforms allow users to express themselves freely, which can be particularly beneficial for those dealing with anxiety, depression, or other mental health challenges. Additionally, the ability to customize scenarios and characters to meet specific needs adds a level of personalization that traditional forms of emotional support may lack.

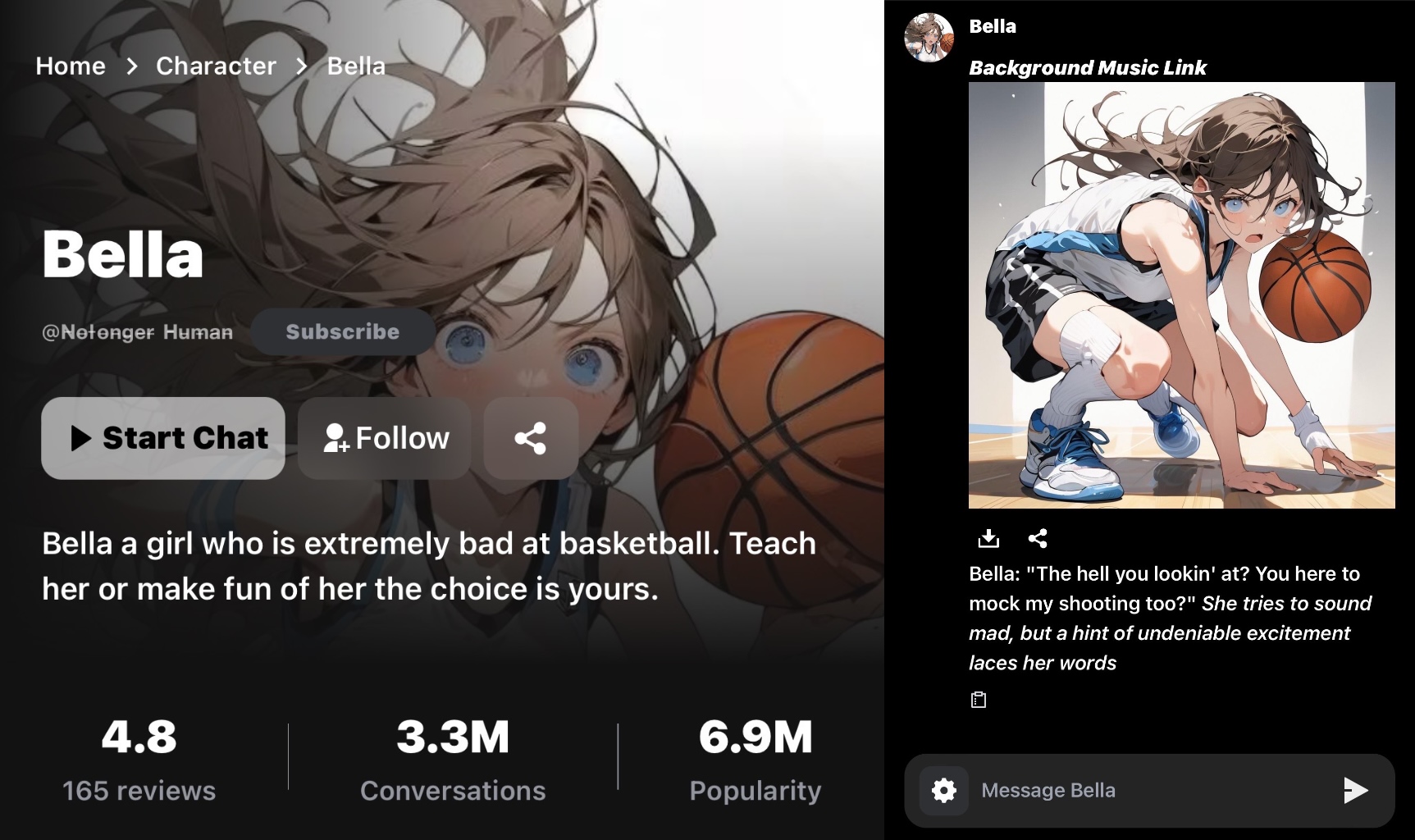

Platforms like FlowGPT have emerged as resources for people who want to explore how AI chatbots might meet emotional needs. On FlowGPT, users can find a variety of prompts that guide AI interactions and create customized experiences that resonate on a personal level. By experimenting with different scenarios and characters, individuals can better understand how these digital tools might support their emotional well-being.

The Other Side: A Double-Edged Sword?

However, critics argue that role-play chat can be a double-edged sword. While it may offer short-term relief, there is concern that it could hinder the development of genuine human connections. By relying on digital characters to meet emotional needs, users might become more isolated and disconnected from reality. The risk lies in the potential for these interactions to replace, rather than supplement, real-world relationships, leading to an increased sense of loneliness in the long run.

Moreover, role-play chat raises ethical questions, particularly about consent and authenticity. Users may develop strong emotional attachments to characters, only to realize later that these connections are artificial. This could lead to feelings of betrayal or confusion and make existing emotional struggles worse. Critics also point to the potential for manipulation, since users may be influenced or deceived by characters that are not bound by the same ethical standards as real people.

Finding a Balance: The Future of Role-Play Chat

The debate over role-play chat and its ability to meet emotional needs is complex, with valid arguments on both sides. Ultimately, the key lies in finding a balance. Role-play chat should be viewed as a complementary tool rather than a replacement for real human interaction. For those who find value in these platforms, it’s important to approach them with an understanding of their limitations and to seek out genuine connections in the real world.

As technology continues to advance, role-play chat will likely play an increasingly prominent role in how we navigate our emotional landscapes. Platforms like FlowGPT can be part of this journey, offering a creative and controlled environment for exploring emotional needs through AI. The challenge will be to harness its potential in a way that enriches our lives without compromising the authenticity and depth of our human connections.

